Wrap all of this into a single method, so further on GetHtmlContent(string URL) will be called. Lastly, convert responseStream to MemoryStream to get its byte array, which if read as a stream will be HTML content. ResponseStream = new DeflateStream(responseStream, CompressionMode.Decompress) ResponseStream = new GZipStream(responseStream, CompressionMode.Decompress) Įlse if (response.ContentEncoding?.IndexOf("deflate", StringComparison.InvariantCultureIgnoreCase) >= 0) if (response.ContentEncoding?.IndexOf("gzip", StringComparison.InvariantCultureIgnoreCase) >= 0) Var responseStream = response.GetResponseStream() Īfter adding AcceptEncoding header and indicating that you’ve accepted gzip and deflate, check if the content is compressed in any way, and decompress it if so. var response = (HttpWebResponse)request.GetResponse()

(HttpRequestHeader.AcceptEncoding, "gzip,deflate") Īfter adding some headers, request a response and get a response stream. (HttpRequestHeader.AcceptLanguage, "en-us,en q=0.5") Request.Accept = "text/html,application/xhtml+xml,application/xml q=0.9,image/webp,*/* q=0.8" Usually providing some identity of a request and what the response should look like: request.UserAgent = "Mozilla/5.0 (Windows NT 10.0 rv:68.0) Gecko/20100101 Firefox/68.0" The website might check for request headers and refuse to serve content if the request doesn’t meet its requirements. However, after executing the request, you might not receive what you've expected.

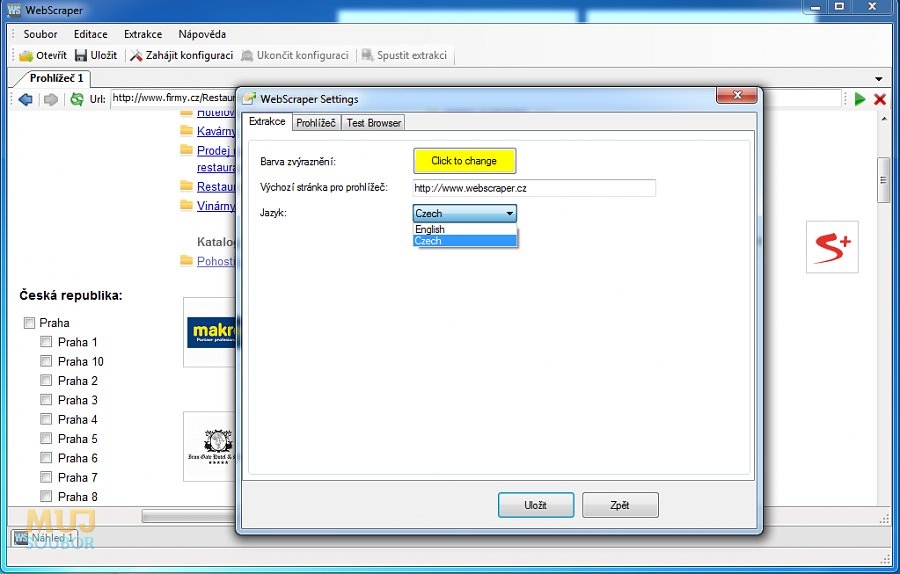

#WEBSCRAPER SCRAPER WINDOW CLOSED DOWNLOAD DATA CODE#

You could just use the above code and be done. Using HTTP requestsĬreate a request using the following code: var request = (HttpWebRequest)WebRequest.Create(url) I'll be using these to test different ways of extracting data. Page with a button, which appears after time out The repository also contains a sample website (ASP.NET Core MVC application) which includes three pages: The following examples can be cloned as a Git repository from. These libraries haven't changed much in a while and should also work on. NET core 3.1 are used for these examples. Setting up the demo The environmentĬ# and.

Since CSS styles lie on top of HTML structure, CSS selectors are somewhat similar to XPath and are a way to select elements using a string pattern. Each has a structure to them and a query that can be written to follow that structure.

XPath is a query language used for selecting elements in documents such as XML and HTML. Two commonly used ways of parsing content is via XPath and CSS. In this instance, using a browser eliminates some of the work when getting web content. However, many websites rely heavily on JavaScript and might not display some content if it is not executed. For example, if you're trying to extract text from a web page and download it as plain text, a simple HTTP request might suffice. Web browsers sometimes use unnecessary resources. They are so for a good reason to account for rendering styles and executing scripts on behalf of web pages, changing how each act and are displayed to be easily readable and usable. Alternately, using web browsers, such as Firefox and Chrome, is slower. Web browsers-as well as the pros and cons of each.ĭownloading web content with HTTP requests and web browsersĪs most everything is connected to the Internet these days, you will probably find a library for making HTTP requests in any programming language. In this blog, I’ll cover two ways of scraping and crawling the web for data using: This information can be a great resource to build applications around, and knowledge of writing such code can also be used for automated web testing. How and where can that information be used? There are as many answers as there are web sites online, and more. However, they perform different functions. The terms are sometimes used interchangeably, and both deal with the process of extracting information. An application performs both of these tasks, since finding new links entails scraping a web page. Web crawling is an iterative process of finding web links and downloading their content. Processing a web page and extracting information out of it is web scraping. Performing the task of pulling perspective code is known as web crawling and web scraping. Web browser display pages let users easily navigate different sites and parse information. 
The Internet contains a vast amount of information and uses web browsers to display information in a structured way on web pages.
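Below is a minimal sketch of what the wrapped `GetHtmlContent(string url)` method might look like, assembled from the steps described above. It is not the original repository's code. The `HtmlDownloader` class, the `Decompress` and `ReadAsString` helpers, and the choice to decode the bytes as UTF-8 are my assumptions. A more careful version would pick the encoding from the response's charset.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Net;
using System.Text;

// Hypothetical wrapper class; the name is an assumption, not from the original demo.
public static class HtmlDownloader
{
    // Sends the request with browser-like headers and returns the page's HTML.
    public static string GetHtmlContent(string url)
    {
        var request = (HttpWebRequest)WebRequest.Create(url);
        request.UserAgent = "Mozilla/5.0 (Windows NT 10.0; rv:68.0) Gecko/20100101 Firefox/68.0";
        request.Accept = "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8";
        request.Headers.Add(HttpRequestHeader.AcceptLanguage, "en-us,en;q=0.5");
        request.Headers.Add(HttpRequestHeader.AcceptEncoding, "gzip,deflate");

        using var response = (HttpWebResponse)request.GetResponse();
        using var responseStream = Decompress(response.GetResponseStream(), response.ContentEncoding);
        return ReadAsString(responseStream);
    }

    // Wraps the stream in a decompressor when Content-Encoding mentions gzip or deflate;
    // otherwise returns the stream unchanged.
    public static Stream Decompress(Stream stream, string contentEncoding)
    {
        if (contentEncoding?.IndexOf("gzip", StringComparison.InvariantCultureIgnoreCase) >= 0)
            return new GZipStream(stream, CompressionMode.Decompress);
        if (contentEncoding?.IndexOf("deflate", StringComparison.InvariantCultureIgnoreCase) >= 0)
            return new DeflateStream(stream, CompressionMode.Decompress);
        return stream;
    }

    // Copies the stream into a MemoryStream and decodes its byte array.
    // Assumption: the page is UTF-8 encoded.
    public static string ReadAsString(Stream stream)
    {
        using var memoryStream = new MemoryStream();
        stream.CopyTo(memoryStream);
        return Encoding.UTF8.GetString(memoryStream.ToArray());
    }
}
```

Calling `HtmlDownloader.GetHtmlContent(url)` should return the page's HTML as a string. Splitting out `Decompress` and `ReadAsString` keeps them testable without a network connection. As an alternative to manual decompression, `HttpWebRequest` also offers `request.AutomaticDecompression = DecompressionMethods.GZip | DecompressionMethods.Deflate;`. Note that `WebRequest.Create` is marked obsolete from .NET 6 onward, where `HttpClient` is the recommended replacement.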

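Once `GetHtmlContent` has returned a page's HTML, the page can be queried with XPath as described earlier. This sketch uses only the built-in `System.Xml` classes, so it assumes well-formed XHTML. Most real-world HTML is not valid XML, and in practice a dedicated HTML parser such as HtmlAgilityPack is commonly used instead. The `XPathDemo` class, the `SelectTexts` helper, and the sample table are illustrative inventions, not part of the original demo.

```csharp
using System.Collections.Generic;
using System.Xml;

// Hypothetical helper showing an XPath query over well-formed markup.
public static class XPathDemo
{
    // Returns the trimmed text of every node matched by the XPath expression.
    // Assumption: the input is well-formed XHTML; XmlDocument.LoadXml throws otherwise.
    public static List<string> SelectTexts(string xhtml, string xpath)
    {
        var document = new XmlDocument();
        document.LoadXml(xhtml);

        var results = new List<string>();
        foreach (XmlNode node in document.SelectNodes(xpath))
        {
            results.Add(node.InnerText.Trim());
        }
        return results;
    }
}
```

For example, on a table whose rows are `<tr><td>Apple</td><td>1</td></tr>` and `<tr><td>Pear</td><td>2</td></tr>`, the expression `//tr/td[1]` selects the first cell of each row. The roughly equivalent CSS selector would be `tr > td:first-child`. `System.Xml` has no CSS selector support, so that style of query needs a third-party library.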